When Past Meets Present

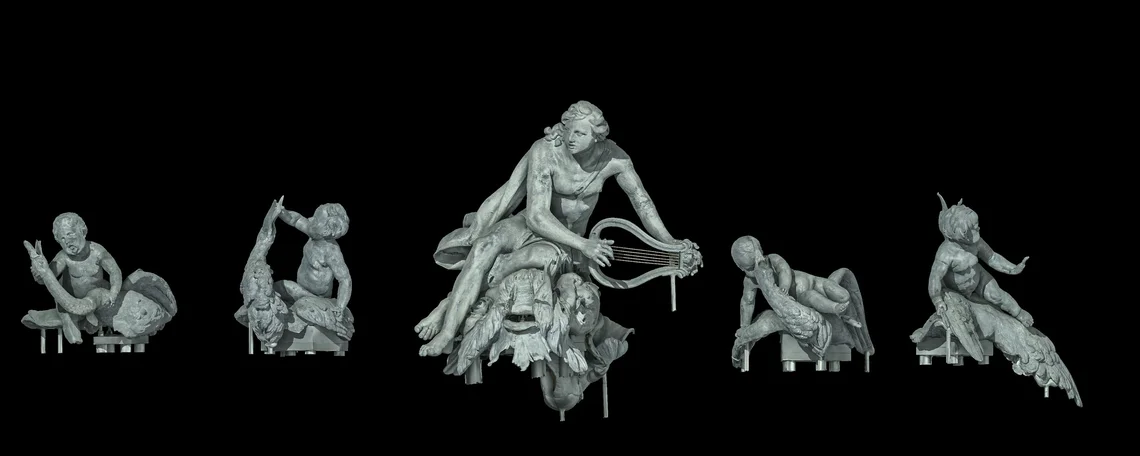

Schwetzingen Palace (Schloss Schwetzingen) is known for the beauty and harmony of its gardens. At the center of its circular parterre stands the Arion Fountain. The scene portrays the shipwrecked singer Arion being saved by a dolphin of Apollo, accompanied to shore by four putti. These five sculptures were created by Barthélemy Guibal in the 18th century for the gardens of Lunéville Palace (Château de Lunéville) but were brought to Schwetzingen in 1766 by Prince-Elector Charles Theodore.

The Arion Fountain in Schwetzingen Palace: bronze copies of the original lead statues

Now, more than two centuries later, these sculptures are making the journey home, this time as 3D scans. Benjamin Moreno and Vincent Lacombe began the thorough, meticulous process of scanning each statue.

Scanning the original Arion, stored under proper conditions

Scanning one of the accompanying putti

These scans will serve as templates from which the sculptures will be reconstructed in Lunéville. This was a special treat for us, as your VG Storytellers had the opportunity to watch the scanning process up close and personal in Schwetzingen!

Join us as we interview Benjamin Moreno, co-founder of IMA Solutions and 3D visualization specialist for medicine, science, and cultural heritage!

Benjamin Moreno scanning one of the putti

Thank you for joining us. We had a great time seeing the process up close in Schwetzingen! Before we begin, can you tell us a bit about yourself and how you came to museums as a field for 3D scanning?

Of course. I was a biology student back in 2005 or 2006, and I remember noticing a lack of new technology for visualization and data processing in the cultural heritage field, especially in museums and archaeology. In biology and medicine, by contrast, we had access to high-end imaging technology such as CT scanners, scanning electron microscopes, and even synchrotrons and other huge equipment.

The idea behind IMA Solutions was to give cultural heritage institutions access to this high-end technology, and the know-how to use it, so they could better understand, visualize, and analyze artwork or fossils from archaeological sites. I founded it in 2008, so 13 years ago, and immediately started working with the British Museum on a worldwide exhibition called "Mummy: The Inside Story." It centered on the mummy of Nesperennub, a priest from the Temple of Karnak, and the goal was to produce a 20-minute IMAX movie with 3D visualizations exploring the inside of the mummy.

For that, VGSTUDIO MAX was the best software in terms of rendering and data processing. I think I started working with version 1.2 in 2006, and this project was done with version 2.0 or 2.1.

"Mummy: The Inside Story" by Benjamin Moreno. And look who narrated it!

And that's how you started getting into museums and cultural institutions?

Yes, I started working with the British Museum, and then other museums asked us to work on their collections. From that point, we were integrating new technologies like long-range 3D scanning, and short-range 3D scanning, as you saw in Schwetzingen, to keep up with the projects. We were working on artwork from a few millimeters in size to complete archaeological sites that were several square kilometers.

Since this technology is, well, not really used in museums, other institutions started asking for access to these tools. And we have continued working for the British Museum ever since.

Any new projects going on?

There is a new exhibition of six mummies in Tokyo, Japan, and all the renderings and visualizations were made with VGSTUDIO MAX. It will then travel to Kobe, Japan, and after that to a few venues in Spain.

So quite international!

Yes, we have also worked for the Louvre in Paris and in Turin, Italy, as well as in South Africa for science, where we used micro-CT and VGSTUDIO MAX for the renderings. So we understood that these institutions needed to take a step into the future, the future of understanding collections. And of course, these are great tools for presenting new discoveries to the audience in an interactive way.

So let's talk a bit about this project between Schwetzingen and Lunéville. You made 3D scans, but what did people do before this technology was available?

Many copies were made using classic plaster or silicone molding, so they produced actual physical models. But that causes problems, because the weight of the sculptures causes deformations. And on the copy, I often cannot tell whether the shapes were modified to correct for this or not.

And with 3D scanning it's different?

On the digital copies, we can correct this kind of issue, and we often have to. We fill the holes and cracks and try to correct the shape where the weight of the sculpture has deformed it. We do this with software; in difficult cases, I like to use freeform modeling software, where you can feel the model and have all the tools of classic sculpting and deformation at hand.

Rendering of the Arion's surface mesh

Is that what the scan data of the Schwetzingen sculptures will be used for?

Yes. As I mentioned, the main goal of the project is to make digital corrections. We found defects on the original sculptures, like cracks and holes caused by their weight, so we have to correct them on the digital model. The corrected model will then be used to create molds for casting a copy in bronze.

The second goal is to have a digital clone for preservation, so we keep a good record of the sculptures for future use. You can use the 3D models to virtually recreate the fountain, check the exact positions, easily put together a composition in about three hours, and see how the pieces fit together best. You can quickly test different ways of positioning the sculptures.

And there are discussions about publishing them on a platform like Sketchfab so that everybody can look at the fountain from home.

A crack in the dolphin's mouth

A crack in Arion's coat

Were there any challenges you faced with scanning huge cultural artifacts?

Yes. You usually face challenges related to the material: typically, very shiny surfaces or semi-transparent and transparent objects. That is purely an optical problem, but it affects all the surface scanners on the market, because they all rely on optical measurement.

We once worked on a crystal vase from Egypt, and of course, we couldn't scan it with a classic optical scanner. We used micro-CT to capture the shape, and afterward we recreated the material and textures from images. That way, you still get a highly accurate 3D model.

Then there are other issues, like very large objects or sites to scan. There you mix different technologies, like photogrammetry and laser scanning, so you may run into problems processing very large amounts of data. But the main problem remains specific surface properties, like translucency or reflectivity. Still, 95 percent of the time it works, because the hardware keeps getting better.

Let's talk segmentation. You work with a lot of segmentation and rendering on VGSTUDIO MAX. Any favorite tips or tricks you'd like to share with our users?

You're right: 80 percent of the work is segmentation, and 20 percent is rendering. I really like working with VG software because of its robustness. You can work with huge amounts of data without worrying about crashes, which are a real nuisance in other programs.

I really like the latest addition, ambient occlusion, especially for models like the sculptures in Schwetzingen, because there we work directly on 3D mesh models. It gives a better perception of volumes and shapes without losing interactivity, because you don't have to activate shadowing. It's a really useful new feature.

Ambient occlusion off vs on

As for tips on segmentation, well, that is a bit difficult. As you can imagine, with cultural artifacts, a lot of segmentation is done by hand. The materials are mixed, and they are not as clearly separated as in industrial parts made of plastic and metal. So it can be useful to create a second data set via copy-paste and apply smoothing filters, like adaptive Gauss.

A good tip, I would say, is to perform the segmentation and create ROIs on the filtered data, and then copy-paste the segmentation to your original data set. You can work faster that way.
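The workflow described above (segment on a smoothed copy, then carry the result back to the untouched original) can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not how VGSTUDIO MAX works internally: a plain separable Gaussian stands in for the edge-preserving adaptive Gauss filter, a single global threshold stands in for the often manual segmentation, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel, truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()


def gaussian_smooth(volume: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian smoothing of a 3D volume.

    Hypothetical stand-in for an edge-preserving filter such as adaptive
    Gauss: a plain Gaussian also blurs edges. Borders are zero-padded by
    np.convolve(mode="same"); each axis must be longer than the kernel.
    """
    kernel = gaussian_kernel_1d(sigma)
    smoothed = volume.astype(float)  # a copy: the "second data set"
    for axis in range(smoothed.ndim):
        smoothed = np.apply_along_axis(
            lambda line: np.convolve(line, kernel, mode="same"), axis, smoothed
        )
    return smoothed


def segment_on_filtered_copy(original: np.ndarray, sigma: float, threshold: float):
    """Define the region of interest on the filtered copy, then apply it
    to the original, unfiltered grey values."""
    filtered = gaussian_smooth(original, sigma)
    mask = filtered > threshold               # segmentation done on filtered data
    roi_values = np.where(mask, original, 0)  # "paste" the mask onto the original
    return mask, roi_values


# Synthetic test volume: a noisy sphere of value 1 on a background of 0.
rng = np.random.default_rng(0)
z, y, x = np.indices((20, 20, 20))
sphere = ((x - 10) ** 2 + (y - 10) ** 2 + (z - 10) ** 2) <= 6 ** 2
noisy = sphere.astype(float) + rng.normal(0.0, 0.4, sphere.shape)

mask, roi = segment_on_filtered_copy(noisy, sigma=1.0, threshold=0.5)
raw_mask = noisy > 0.5  # thresholding the raw data directly, for comparison
print("misclassified voxels, filtered copy:", np.count_nonzero(mask != sphere))
print("misclassified voxels, raw data:     ", np.count_nonzero(raw_mask != sphere))
```

The grey values inside the ROI come from the original scan, so any measurement or rendering still uses unsmoothed data; the filter only helps decide where the boundary lies.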

What about rendering?

For rendering, I really like to make animations where I mix Phong rendering with X-ray rendering and then blend them in post-production. This can work very well for identifying and visualizing certain structures.

We actually used that for the latest British Museum exhibition in Tokyo. We had a sarcophagus made of more than 150 wooden pieces, and we segmented each part. It is covered with a kind of plaster with paintings and decorations. We used Phong rendering to show all the parts in an exploded view, then put them back together and, in the same animation, switched to X-ray view so you could see all the interfaces between the wooden planks. So that's two animations, one rendered with Phong and one with X-ray, combined into a more appealing visualization. Personally, I go for photorealistic renderings, since we work for museums; they usually want results as close to the original as possible.
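At its simplest, a Phong-to-X-ray transition like the one described above is a crossfade between two renderings of the same camera path. The sketch below assumes both animations were exported as frame sequences of identical size and length and loaded as NumPy arrays with values in [0, 1]; the function name and the linear blend are illustrative choices, not a feature of VGSTUDIO MAX.

```python
import numpy as np


def crossfade(phong: np.ndarray, xray: np.ndarray, start: int, end: int) -> np.ndarray:
    """Blend two frame sequences of shape (frames, height, width, channels).

    Frames up to `start` are pure Phong, frames from `end` on are pure
    X-ray, and frames in between get a linear mix of the two.
    """
    if phong.shape != xray.shape:
        raise ValueError("both renderings must have the same shape")
    if end <= start:
        raise ValueError("end must be greater than start")
    t = np.arange(phong.shape[0], dtype=float)
    # Blend weight per frame: 0 -> Phong only, 1 -> X-ray only.
    alpha = np.clip((t - start) / (end - start), 0.0, 1.0)
    alpha = alpha[:, None, None, None]  # broadcast over height, width, channels
    return (1.0 - alpha) * phong + alpha * xray


# Example with synthetic frames: 10 frames of 4x4 RGB images.
phong_frames = np.full((10, 4, 4, 3), 0.8)
xray_frames = np.full((10, 4, 4, 3), 0.2)
video = crossfade(phong_frames, xray_frames, start=2, end=6)
```

A linear ramp is the plainest option; an eased curve (for example a smoothstep on `alpha`) would make the switch feel less abrupt, at the cost of one more design decision.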

Before rendering compared to after rendering (texture, material and lighting)

Do you have a favorite segmentation tool?

3D polyline. It saves a lot of time, especially with very complex data such as organic shapes. You can quickly manipulate your object, cut around it, and remove large parts in the 3D view. It is very efficient.
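To give a rough idea of what such a cut does, the sketch below projects each voxel along a fixed viewing direction onto the screen plane and removes every voxel whose projection falls inside a polygon drawn by the user. It assumes an orthographic view looking along the z axis and uses an even-odd (ray-casting) point-in-polygon test; the function names are hypothetical, and this is not the actual implementation of the 3D polyline tool in VGSTUDIO MAX.

```python
import numpy as np


def points_in_polygon(px: np.ndarray, py: np.ndarray, polygon: np.ndarray) -> np.ndarray:
    """Even-odd (ray-casting) test of many points against one closed polygon."""
    inside = np.zeros(px.shape, dtype=bool)
    n = len(polygon)
    with np.errstate(divide="ignore", invalid="ignore"):
        for i in range(n):
            x1, y1 = polygon[i]
            x2, y2 = polygon[(i + 1) % n]
            # Does a horizontal ray from (px, py) towards +x cross this edge?
            crosses = (y1 > py) != (y2 > py)
            # x coordinate where the edge meets the ray's line; horizontal
            # edges divide by zero, but `crosses` is False for them anyway.
            x_int = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            inside ^= crosses & (px < x_int)
    return inside


def lasso_cut(volume: np.ndarray, polygon_xy) -> np.ndarray:
    """Remove every voxel whose (x, y) projection along z lies inside the polygon.

    Assumes an orthographic view along the z axis; the cut goes through
    the full depth of the volume. The input volume is left unchanged.
    """
    nx, ny, _ = volume.shape
    xs, ys = np.meshgrid(np.arange(nx), np.arange(ny), indexing="ij")
    footprint = points_in_polygon(
        xs.astype(float), ys.astype(float), np.asarray(polygon_xy, dtype=float)
    )
    out = volume.copy()
    out[footprint, :] = 0  # clear whole columns of voxels behind the polygon
    return out


# Example: cut a square region out of a 10 x 10 x 4 block of ones.
block = np.ones((10, 10, 4))
cut = lasso_cut(block, [(2, 2), (6, 2), (6, 6), (2, 6)])
```

A real tool would project along the current camera direction, possibly with perspective, rather than a fixed axis; the fixed z axis keeps the sketch short.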

We imagine this project will also involve reverse engineering to get CAD models of the sculptures. Do you use reverse engineering in other projects, such as at AnatomikModeling SAS?

Yes, I use it for medical projects: not for our thoracic implants, but for our tracheal implants. We use CAD software to add specific structures whose dimensions have to be very precise, for instance studs for radiopaque components inside the medical device. CAD software lets us set dimensions exactly where we want them.

We use VG only to extract the virtual model of the patient. After that, it is a matter of pure segmentation of the different tissues of interest, like bones, muscles, etc. Then we export STL files to our design software. Our design tools are essentially organic; we do not create the CAD models directly. We use organic shaping tools to create our implants, and once the shape is done, we use a kind of surfacing algorithm to generate the CAD model.

Last question. At IMA Solutions, you work with the past, and at AnatomikModeling SAS, you work with the present. How do you think CT technology will change the future?

I definitely think CT technology is becoming more and more precise. Let me give you an example from our Egyptian collections.

We are working on virtually unrolling papyri. When we CT scanned the mummy of a young Egyptian girl, we found many papyrus rolls. We can see that there is something on the rolls, but we cannot read the inscriptions. In the future, though, I'm sure CT will be accurate enough to read the glyphs. It's just a question of time.

And from a medical perspective, of course, it will help in diagnosing pathologies and disease, and in designing customized medical devices. In the future, both domains will gain a lot in terms of product quality. And having micro-CT accessible nearby is a big advantage.

I remember that 15 years ago, just getting access to a micro-CT was quite difficult. In Toulouse, for example, there were only five or six, in public laboratories and private companies. Now you can easily find micro-CT or medical CT equipment. So the technology is spreading, and access to it along with it, which is interesting.

Thanks again to Benjamin Moreno for joining us from IMA Solutions.

Arion playing the guitar and riding an animal that the artist thought dolphins looked like.

Ready to Learn More?

Users of VGSTUDIO MAX can find out more about segmentation, rendering, and filters in the tutorials included in the software.

Got a Story?

If you have a VG Story to tell, let us know! Contact our Storyteller Team at: storytellers@volumegraphics.com. We look forward to hearing from you.